CS 5043: HW4: Semantic Labeling

Assignment notes:

- Deadline: Thursday, March 28th @11:59pm.

- Hand-in procedure: submit a zip file to Gradescope

- Do not submit MSWord documents.

Data Set

The Chesapeake Watershed data set is derived from satellite imagery over all of the US states that are part of the Chesapeake Bay watershed system. We are using the patches portion of the data set. Each patch is a 256 x 256 image with 26 channels, in which each pixel corresponds to a 1m x 1m area of space. Some of these channels are visible light channels (RGB), while others encode surface reflectivity at different frequencies. In addition, each pixel is labeled as being one of:

- 0 = no class

- 1 = water

- 2 = tree canopy / forest

- 3 = low vegetation / field

- 4 = barren land

- 5 = impervious (other)

- 6 = impervious (road)
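To check the class balance of the pixel labels yourself, the label IDs above can be kept in a small lookup table. The following is a minimal NumPy sketch; CLASS_NAMES and class_fractions are hypothetical helpers, not part of chesapeake_loader.py.

```python
import numpy as np

# Label IDs as listed above (hypothetical helper table)
CLASS_NAMES = {
    0: 'no class',
    1: 'water',
    2: 'tree canopy / forest',
    3: 'low vegetation / field',
    4: 'barren land',
    5: 'impervious (other)',
    6: 'impervious (road)',
}
NUM_LABELS = len(CLASS_NAMES)


def class_fractions(labels):
    """Return the fraction of pixels assigned to each label 0..6.

    labels: integer array of any shape (e.g., batch_size x 256 x 256).
    """
    counts = np.bincount(np.asarray(labels, dtype=np.int64).ravel(),
                         minlength=NUM_LABELS)
    return counts / counts.sum()
```

The largest entry of class_fractions(y) is the accuracy that a model predicting only the most common class would get on those same pixels.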

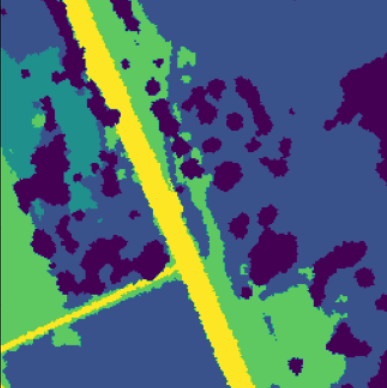

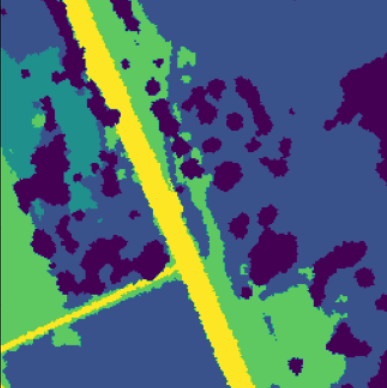

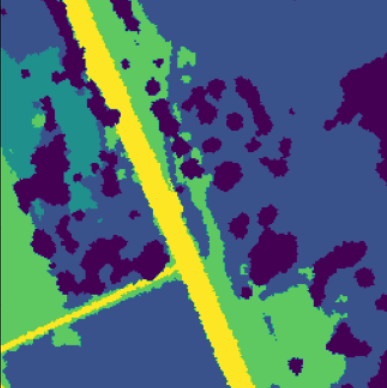

Here is an example of the RGB image of one patch and the corresponding pixel labels:

Notes:

Data Organization

All of the data are located on the supercomputer in /home/fagg/datasets/radiant_earth/pa. Within this directory, there are both train and valid directories. Each of these contains directories F0 ... F9 (folds 0 to 9). Each training fold is composed of 5000 patches. Because of the size of the folds, we have provided code that produces a TF Dataset that dynamically loads the data as you need it. We will use the train directory to draw our training and validation sets from, and the valid directory to draw our testing set from.

Local testing: the file chesapeake_small.zip contains the data for folds 0 and 9 (it is 6GB compressed).

Data Access

chesapeake_loader.py is provided. The key function call is:

    ds_train, ds_valid, ds_test, num_classes = create_datasets(
        base_dir='/home/fagg/datasets/radiant_earth/pa',
        fold=0,
        train_filt='*[012345678]',
        cache_dir=None,
        repeat_train=False,
        shuffle_train=None,
        batch_size=8,
        prefetch=2,
        num_parallel_calls=4)

where:

- ds_train, ds_valid, ds_test are TF Dataset objects that load and manage your data

- num_classes is the number of classes that you are predicting

- base_dir is the main directory for the dataset

- fold is the fold to load (0 ... 9)

- train_filt is a shell-style (glob) filename pattern that specifies which file numbers to include:

  - '*0' will load all numbers ending with zero (500 examples).

  - '*[01234]' will load all numbers ending with 0, 1, 2, 3 or 4.

  - '*' will load all 5000 examples.

  - '*[012345678]' is the largest training set you should use.

- cache_dir is the cache directory, if there is one (the empty string ('') to cache to RAM; an LSCRATCH location to cache to local SSD)

- repeat_train: if True, repeat the training set indefinitely. NOTE: you must use this in combination with an integer value of steps_per_epoch for model.fit() (this tells model.fit() how many batches to consume from the data set)

- shuffle_train is the size of the training set shuffle buffer

- batch_size is the size of the batches produced by your dataset

- prefetch is the number of batches that will be buffered

- num_parallel_calls is the number of threads to use to create the Dataset
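The train_filt patterns behave like shell wildcards: '*' matches any prefix and [...] matches one listed character. This sketch uses Python's fnmatch module to count how many file numbers each pattern selects, assuming (hypothetically) that the loader matches the pattern against file numbers 0 ... 4999 with glob semantics; select_ids is an illustrative helper, not part of the loader.

```python
from fnmatch import fnmatchcase


def select_ids(ids, pattern):
    """Keep the IDs (as strings) that match a shell-style pattern."""
    return [i for i in ids if fnmatchcase(i, pattern)]


# Hypothetical file numbers for one 5000-patch fold
ids = [str(n) for n in range(5000)]

for pattern in ['*0', '*[01234]', '*[012345678]', '*']:
    print(pattern, len(select_ids(ids, pattern)))
```

Under this assumption the counts are 500, 2500, 4500 and 5000, which matches the 500 and 5000 figures given above.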

The returned Datasets will generate batches of input/output tuples of the specified size:

- Inputs: floats: batch_size x 256 x 256 x 26

- Outputs: int8: batch_size x 256 x 256
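Before building a model, it can help to confirm that a batch has the shapes listed above and to pull out the RGB channels for display. This is a minimal NumPy sketch; check_batch and rgb_for_display are hypothetical helpers, and the per-image min/max rescaling is an assumption, since the raw channel value range is not specified here.

```python
import numpy as np


def check_batch(x, y, num_channels=26):
    """Sanity-check one (inputs, labels) batch against the documented shapes."""
    x = np.asarray(x)  # also accepts eager TF tensors
    y = np.asarray(y)
    assert x.ndim == 4 and x.shape[1:] == (256, 256, num_channels), x.shape
    assert y.shape == x.shape[:3], (x.shape, y.shape)
    assert y.min() >= 0 and y.max() <= 6, 'labels outside 0..6'


def rgb_for_display(example):
    """Take channels 0,1,2 of one example (256 x 256 x 26), rescaled to [0, 1].

    Per-image min/max scaling is an assumption made for plotting only.
    """
    rgb = np.asarray(example[..., :3], dtype=np.float32)
    lo, hi = rgb.min(), rgb.max()
    if hi > lo:
        return (rgb - lo) / (hi - lo)
    return np.zeros_like(rgb)
```

With the real data, something like `x, y = next(iter(ds_train))` followed by `check_batch(x, y)` would exercise it on one batch.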

The Problem

Create an image-to-image translator that does semantic labeling of the images on a pixel-by-pixel basis.

Details:

- Your network output should have shape (examples, rows, cols, number of classes), where the sum of all class outputs for a single pixel is 1 (i.e., we are using a softmax across the last dimension of your output).

- Use tf.keras.losses.SparseCategoricalCrossentropy() as your loss function (not the string!). This will properly translate between your one output per class per pixel and the desired outputs, which have just one class label for each pixel.

- Use tf.keras.metrics.SparseCategoricalAccuracy() as an evaluation metric. Because of the class imbalance, a model that predicts the majority class will have an accuracy of ~0.65.

- Try using a sequential-style model, as well as a full U-net model (with skip connections). In fact, try writing a single function that produces both types of model.
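To make the loss and metric concrete, the following NumPy sketch recomputes, on toy arrays, what sparse categorical cross-entropy and sparse categorical accuracy measure for per-pixel softmax outputs. It is an illustrative re-implementation (the helper names are hypothetical and the epsilon clipping is a simplification), not the Keras code; in your model, use the Keras classes named above.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax over the class axis."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def sparse_ce(labels, probs, eps=1e-7):
    """Mean over all pixels of -log(probability given to the true label).

    labels: (examples, rows, cols) integers; probs: (examples, rows, cols, classes).
    """
    idx = labels[..., None].astype(np.int64)
    p_true = np.take_along_axis(probs, idx, axis=-1)[..., 0]
    return float(np.mean(-np.log(np.clip(p_true, eps, 1.0))))


def sparse_accuracy(labels, probs):
    """Fraction of pixels whose highest-probability class equals the true label."""
    return float(np.mean(np.argmax(probs, axis=-1) == labels))


# Toy example: one 2 x 2 "image" where 3 of 4 pixels are class 2
labels = np.array([[[2, 2], [2, 1]]])
majority = np.eye(7)[np.full_like(labels, 2)]  # predict class 2 everywhere
print(sparse_accuracy(labels, majority))  # 0.75
```

The toy example shows why accuracy alone can mislead under class imbalance: always predicting the majority class already scores 0.75 here, just as it scores ~0.65 on this data set.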

Deep Learning Experiment

Create two different models:

- A shallow model (could even have no skip connections)

- A deep model

For each model type, perform 5 different experiments:

- Use '*[012345678]' for training (train partition). Note: when debugging, just use '*0'.

- The five different experiments will use folds F5 ... F9. Note that there is no overlap between the folds.
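Following the earlier suggestion of a single function for both model types, here is a minimal Keras sketch. The function name build_segmentation_model, the filter counts, the ELU activations, and the Adam learning rate are hypothetical choices, not a required architecture: len(filters) sets the depth, and use_skips switches between a sequential-style encoder/decoder and a U-net. The loss and metric are the ones specified above.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def build_segmentation_model(input_shape=(256, 256, 26), num_classes=7,
                             filters=(16, 32), use_skips=True,
                             learning_rate=1e-3):
    """Build an encoder/decoder that outputs a softmax per pixel.

    filters: filters per encoder level (hypothetical defaults); len(filters)
             is the depth, so rows/cols must be divisible by 2**len(filters).
    use_skips: True gives a U-net; False gives a plain encoder/decoder.
    num_classes: pass the value returned by create_datasets().
    """
    inputs = keras.Input(shape=input_shape)
    x = inputs
    skips = []
    # Encoder: two 3x3 convolutions per level, then 2x2 max pooling
    for f in filters:
        x = layers.Conv2D(f, 3, padding='same', activation='elu')(x)
        x = layers.Conv2D(f, 3, padding='same', activation='elu')(x)
        skips.append(x)
        x = layers.MaxPooling2D(2)(x)
    # Bottleneck at the lowest resolution
    x = layers.Conv2D(2 * filters[-1], 3, padding='same', activation='elu')(x)
    # Decoder: upsample and optionally concatenate the matching encoder output
    for f, skip in zip(reversed(filters), reversed(skips)):
        x = layers.UpSampling2D(2)(x)
        if use_skips:
            x = layers.Concatenate()([x, skip])
        x = layers.Conv2D(f, 3, padding='same', activation='elu')(x)
        x = layers.Conv2D(f, 3, padding='same', activation='elu')(x)
    # 1x1 convolution + softmax: one probability vector per pixel
    outputs = layers.Conv2D(num_classes, 1, activation='softmax')(x)
    model = keras.Model(inputs, outputs)
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
                  loss=keras.losses.SparseCategoricalCrossentropy(),
                  metrics=[keras.metrics.SparseCategoricalAccuracy()])
    return model


if __name__ == '__main__':
    # Hypothetical shallow (no skips) and deep (U-net) configurations
    shallow = build_segmentation_model(filters=(16,), use_skips=False)
    deep = build_segmentation_model(filters=(16, 32, 64, 128), use_skips=True)
    x = np.zeros((1, 256, 256, 26), dtype=np.float32)
    print(shallow.predict(x, verbose=0).shape, deep.predict(x, verbose=0).shape)
```

Keeping both variants behind one function means the shallow and deep experiments differ only in arguments, which also makes Figures 1a,b directly comparable.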

Reporting

- Figures 1a,b: model architectures from plot_model().

- Figures 2a,b: validation accuracy as a function of training epoch (5 curves per model).

- Figures 3a,b,c,d,e: for each model, evaluate using the test data set and generate a confusion matrix (so, one confusion matrix per fold).

- Figure 4: bar chart of test accuracy (10 bars). Use a different color for the shallow and deep networks.

- Figures 5a,b: for both models, show ten interesting examples (one per row). Each row includes three columns: satellite image (channels 0,1,2); true labels; predicted labels.

  - plt.imshow can be useful here, but make sure that the label-to-color mapping is the same for all of the label images.

  - plt.imshow will also handle images with one int per pixel. In this case, it will use a unique color for each integer value. Make sure to use the vmax=6 argument.

- Reflection

  - How do the training times compare between the two model types?

  - Describe the relative performance of the two model types.

  - Describe any qualitative differences between the outputs of the two model types.
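For Figures 3 and 5, the softmax outputs have to be turned back into one label per pixel (argmax over the class axis), and the confusion matrix is then a count of (true, predicted) label pairs over all test pixels. This is a minimal NumPy sketch with hypothetical helper names; it assumes labels in 0 ... 6 and sums one matrix per batch so the whole test set never has to sit in memory at once.

```python
import numpy as np


def predicted_labels(probs):
    """Convert softmax outputs (examples, rows, cols, classes) to label images."""
    return np.argmax(probs, axis=-1)


def confusion_matrix(y_true, y_pred, num_classes=7):
    """Pixel-level confusion matrix: rows = true label, cols = predicted label."""
    t = np.asarray(y_true, dtype=np.int64).ravel()
    p = np.asarray(y_pred, dtype=np.int64).ravel()
    counts = np.bincount(t * num_classes + p, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def confusion_over_dataset(predict_fn, batches, num_classes=7):
    """Accumulate the confusion matrix over an iterable of (inputs, labels) batches.

    predict_fn maps a batch of inputs to softmax outputs.
    """
    total = np.zeros((num_classes, num_classes), dtype=np.int64)
    for x, y in batches:
        total += confusion_matrix(y, predicted_labels(predict_fn(x)), num_classes)
    return total
```

With a trained model this might be called as `confusion_over_dataset(lambda x: model.predict(x, verbose=0), ds_test)`; the test accuracy for Figure 4 is then `np.trace(cm) / cm.sum()`. When plotting the label images for Figure 5, passing both vmin=0 and vmax=6 to plt.imshow pins the color scale at both ends, so an image that happens to lack class 0 is still colored consistently.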

What to Hand In

- Your python code (.py) and any notebook files (.ipynb)

- Figures 1-5

- Your reflection

Grades

- 20 pts: Clean, general code for model building (including in-code documentation)

- 5 pts: Figure 1a,b

- 10 pts: Figure 2a,b

- 5 pts each: Figures 3a,b,c,d,e

- 10 pts: Figure 4

- 10 pts: Figures 5a,b

- 10 pts: Reflection

- 10 pts: Reasonable test set performance for all deep rotations (.93 or better)


Hints

- Start small. Get the architecture working before throwing lots of data at it.

- Write generic code.

- Start early. Expect the learning process for these models to be relatively long.

- Caching the TF Datasets to RAM works fine here. Specify the empty string as the cache_dir argument when creating the datasets.

- A batch size of 64 works for 80GB GPUs.

- Be prepared to train for hundreds of epochs (with 100 steps_per_epoch).

- To monitor GPU utilization in WandB: look for the System section and the figure Process GPU Utilization.
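With repeat_train=True, an "epoch" is just steps_per_epoch batches, not one pass over the data. This small sketch does the arithmetic for the hinted settings; epoch_coverage is a hypothetical helper, and the training-set size of 4500 is an assumption (nine times the 500 patches that '*0' selects).

```python
import math


def epoch_coverage(num_train, batch_size, steps_per_epoch):
    """Return (approx. batches per full pass, passes over the data per 'epoch')."""
    batches_per_pass = math.ceil(num_train / batch_size)
    passes_per_epoch = steps_per_epoch * batch_size / num_train
    return batches_per_pass, passes_per_epoch


# Assumed: '*[012345678]' selects about 4500 patches
batches, passes = epoch_coverage(num_train=4500, batch_size=64, steps_per_epoch=100)
print(batches, round(passes, 2))  # 71 1.42
```

So, under these assumptions, each reported epoch sees the training set about 1.4 times, and a few hundred epochs correspond to several hundred passes over the data.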

andrewhfagg -- gmail.com

Last modified: Sun Mar 17 22:26:23 2024